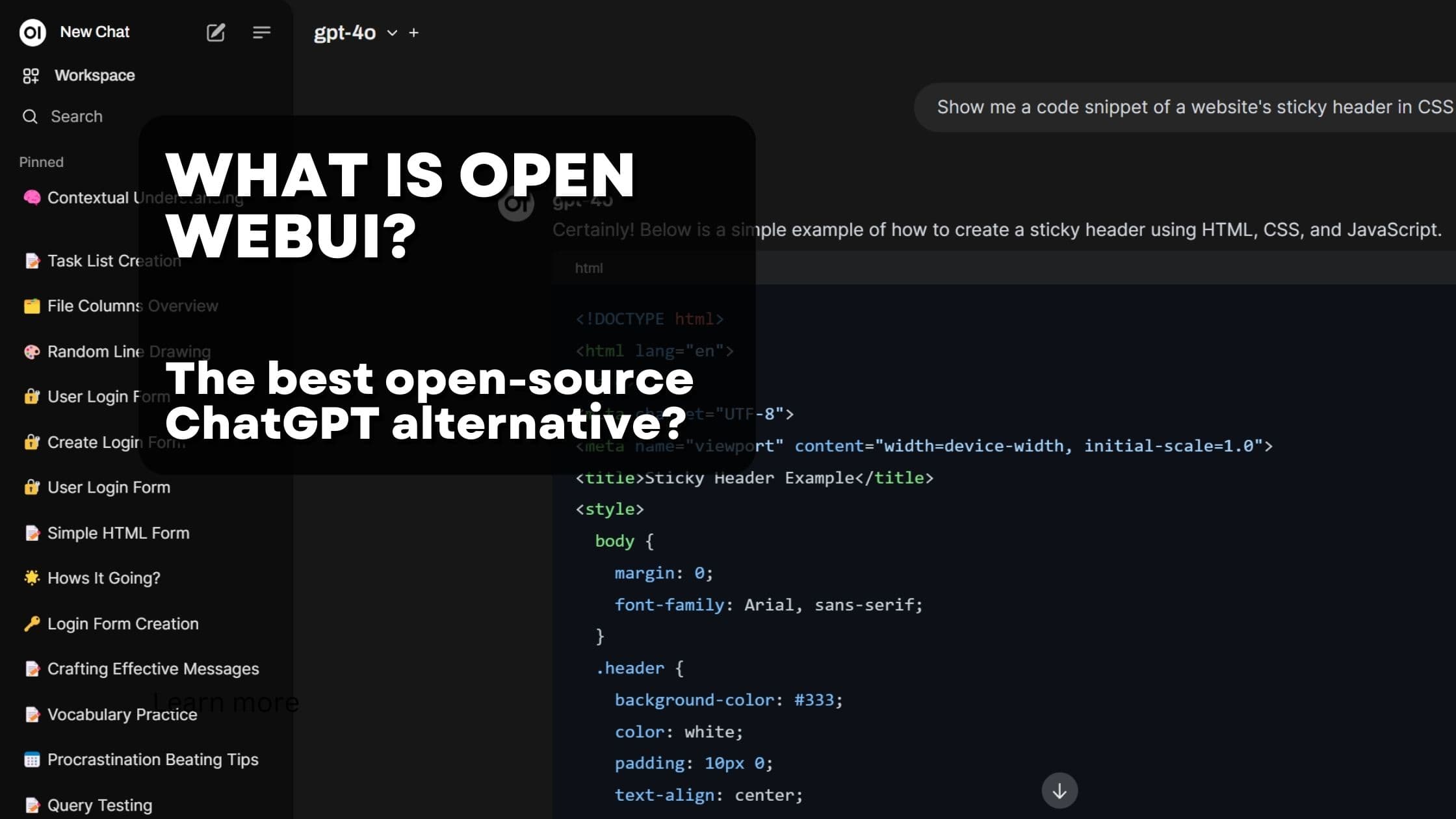

What is Open WebUI? The best self-hosted, open source ChatGPT alternative?

ChatGPT is the fastest-growing app of all time. It is used by millions of people every day. However, it has one significant downside: in its free plan as well as in the paid "Plus" plan, OpenAI uses your conversations by default to train its latest models. Just imagine if one of your conversations ended up in the next model generation.

While OpenAI offers more expensive plans in which it promises not to use your data, the costs can add up quickly. Furthermore, interactions with LLMs can contain some of a company's most confidential data. Many organizations simply can't trust OpenAI with such data.

This is where Open Source ChatGPT alternatives come into play.

One of them is Open WebUI, a self-hosted web interface for large language models that positions itself as one of the major alternatives to ChatGPT. In fact, the project claims to be much more than a pure clone: it promises the best LLM experience out there.

In this article, we introduce the major features of Open WebUI and answer the question: Is it really better than "the original" ChatGPT?

Why a self-hosted, open source ChatGPT alternative?

The demand for AI-driven communication tools is higher than ever, and while platforms like ChatGPT have gained significant traction, there are compelling reasons to consider a self-hosted, open-source alternative like Open WebUI.

Here’s why:

- Enhanced Privacy and Data Control: The main reason above all: Do you trust any chatbot provider to meaningfully protect your data? (Note: I don't want to be sarcastic here. For many people the answer might be yes. However, for a lot of companies it is a clear no.) Self-hosted solutions give you control over your data, simple as that. With Open WebUI, sensitive information remains within your own infrastructure, minimizing the risks associated with third-party data handling. This is particularly crucial for businesses and individuals concerned about privacy and compliance with regulations such as GDPR and CCPA.

- Customization and Flexibility: Open-source platforms offer extensive customization options. Open WebUI allows users to change the interface, integrate specific APIs via pipelines, and modify functionality to suit their needs. This flexibility is invaluable for developers and organizations looking to create AI solutions that align with their operational and corporate requirements.

- Cost Efficiency: ChatGPT is not expensive per se. However, the cheapest plan that provides some sort of data privacy costs at least $25 per user per month, and the price goes up to $70 per user for the Enterprise plan (which is what most organizations would need). For a company with just 100 employees, you'd end up paying between $2,500 and $7,000 per month. If you use Open WebUI with the quite good Azure OpenAI API instead, you only pay for what your users actually consume in chat tokens (roughly, pieces of words). Even if you serve them the best available model, realistically many of your users will cost less than $5 per month.

- Avoiding Vendor Lock-In: Relying on proprietary platforms can lead to vendor lock-in, where switching providers becomes costly and complex. While this is true for any proprietary software, it is even more true for AI models. Why? Because a new, better model is released every month. Currently, Claude from Anthropic seems to be the best model on the market; two months ago it was GPT-4. If you use ChatGPT, you have to use GPT-4 no matter what. Open WebUI allows you to switch between models with the click of a button.

- Advanced Features and Integration: Beyond what ChatGPT offers, Open WebUI provides a bunch of intelligent features not seen in other platforms. Among them are support for running LLM-generated Python code in the browser (yes, really!), advanced model management and superb extensibility with pipelines (more on that later).

In summary, by using a self-hosted, open-source alternative like Open WebUI, you gain greater control, flexibility, and cost savings, all while benefiting from the collaborative spirit of the open-source community.
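To put the cost argument into numbers, here is a quick back-of-the-envelope calculation. It uses only the figures from the list above ($25 and $70 per seat, and our estimate of under $5 of API usage per user per month); these are estimates, not price quotes, and the pessimistic case assumes every user spends the full $5.

```python
# Back-of-the-envelope monthly costs for a 100-person company.
# All prices are the article's estimates, not official quotes.


def seat_cost(users: int, price_per_seat: float) -> float:
    """Monthly cost of a per-seat subscription."""
    return users * price_per_seat


def usage_cost(monthly_spend_per_user: list[float]) -> float:
    """Monthly cost when you only pay for the tokens each user consumes."""
    return sum(monthly_spend_per_user)


users = 100
team_plan = seat_cost(users, 25.0)        # cheapest plan with some data privacy
enterprise_plan = seat_cost(users, 70.0)  # enterprise plan
# Pessimistic case: every single user hits the $5 estimate.
api_upper_bound = usage_cost([5.0] * users)

for label, cost in [
    ("Seats (cheapest private plan)", team_plan),
    ("Seats (enterprise)", enterprise_plan),
    ("Pay-per-use via API (upper bound)", api_upper_bound),
]:
    print(f"{label}: ${cost:,.0f} per month")
```

Even with every user at $5, usage-based billing comes to $500 a month, a fifth of the cheapest seat-based option.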

What is Open WebUI and what are its major features?

The major selling point of Open WebUI was already discussed in the intro: it is a self-hosted, open source alternative to ChatGPT.

Open WebUI is designed to work with various models and model-serving platforms, including Ollama, Anthropic's Claude, Llama 3.1 and many more.

While Open WebUI bears a striking resemblance to the ChatGPT UI, it goes far beyond being a clone. In fact, the tool offers so many nice and handy features that it's impossible to list them all here; for the complete list, see the official documentation. However, the most important ones are worth mentioning, along with a quick introduction to how they work. Instead of simply listing them, let's take a virtual tour of Open WebUI, with screenshots.

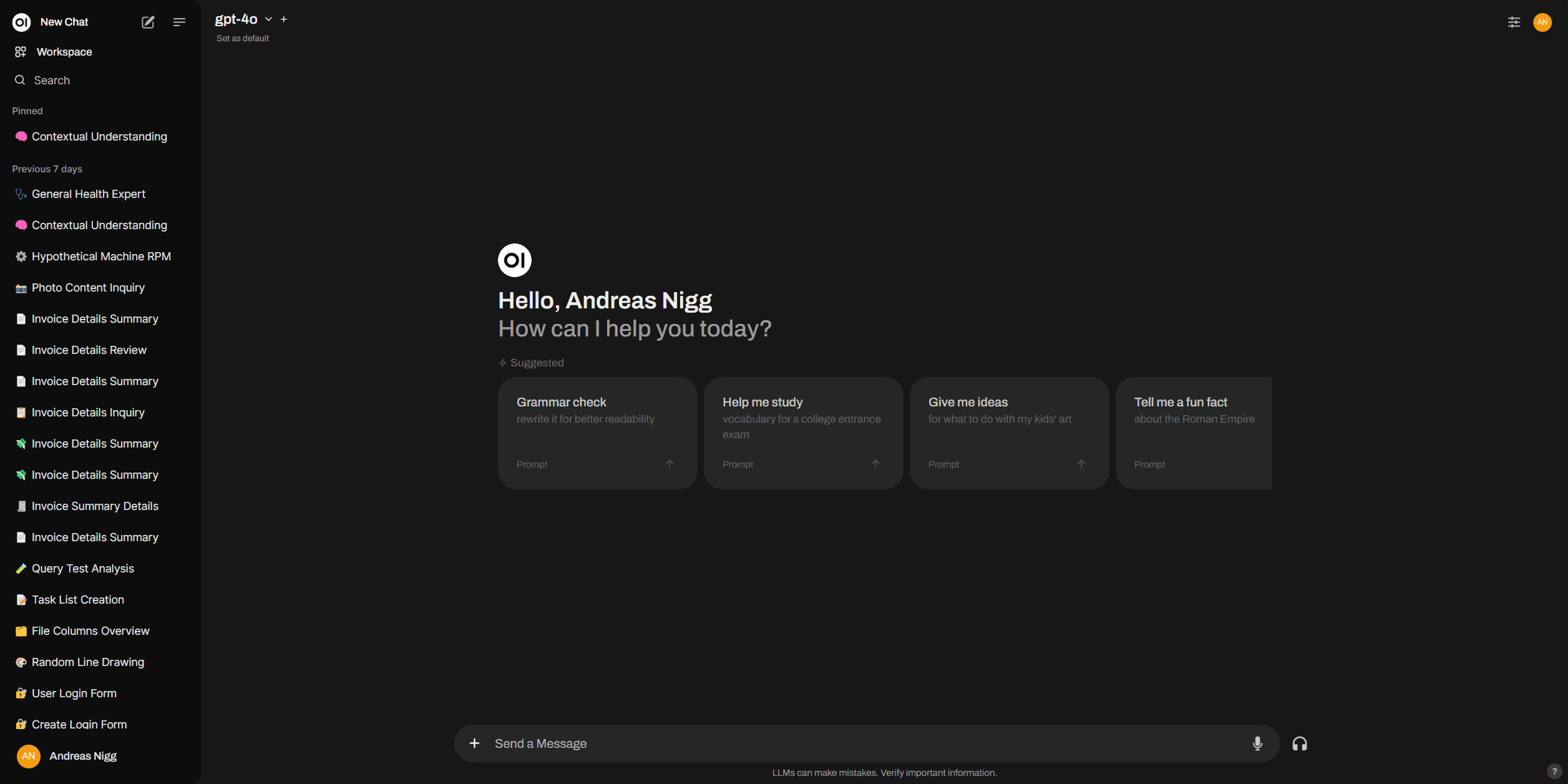

Directly after you've signed in to Open WebUI, you are greeted with a very familiar interface: Your typical LLM chat window.

Open WebUI Chat Interface

This screen provides everything you'd expect from an LLM chat interface: a text input field, your chat history and buttons to edit the chat history. Open WebUI lets you edit not only the user's messages but also the answers received from the LLM, which is great for few-shot prompting. Another feature known from ChatGPT is the upvote/downvote buttons, but in Open WebUI the upvotes/downvotes and comments are stored in your own database, meaning you can build your own RLHF dataset instead of one for OpenAI. And you get all the output formatting you'd expect: Markdown, LaTeX, code blocks and even Mermaid diagrams.

Open WebUI formatting

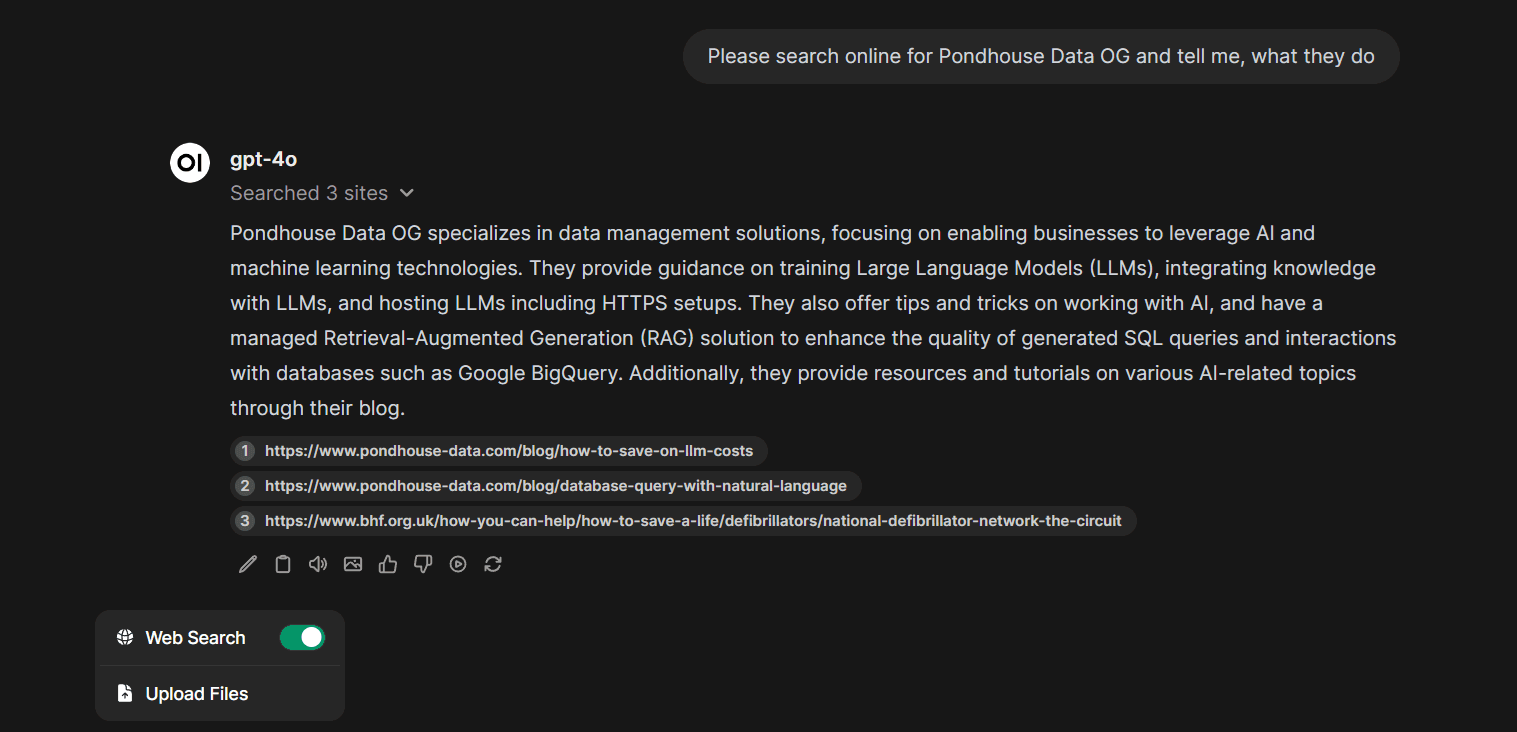

Google Web Search

LLMs have limited knowledge, especially about recent events. Therefore, any good chat UI needs to provide a way to allow LLMs to search the web. Open WebUI provides a Google web search integration, which allows LLMs to search the web for information.

Open WebUI Google Web Search

Besides Google web search, Open WebUI also offers other search providers like SearXNG and Brave Search.
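Conceptually, a web search integration does two things: it queries a search provider and then hands the top results to the LLM as context. The sketch below illustrates this with SearXNG, assuming a hypothetical self-hosted instance at `http://localhost:8080` with its JSON output format enabled and results carrying `title`, `url` and `content` fields. The helper names are our own; this is not how Open WebUI implements the feature internally.

```python
from urllib.parse import urlencode


def searxng_query_url(base_url: str, query: str) -> str:
    """Build a SearXNG search URL that requests JSON instead of HTML."""
    params = urlencode({"q": query, "format": "json"})
    return f"{base_url.rstrip('/')}/search?{params}"


def build_grounded_prompt(question: str, results: list[dict], max_results: int = 3) -> str:
    """Put the top search hits in front of the question as numbered context."""
    context = "\n".join(
        f"[{i}] {hit['title']} ({hit['url']}): {hit['content']}"
        for i, hit in enumerate(results[:max_results], start=1)
    )
    return (
        "Answer the question using the search results below and cite them by number.\n\n"
        f"{context}\n\nQuestion: {question}"
    )


# Hypothetical local SearXNG instance and made-up search hits.
url = searxng_query_url("http://localhost:8080/", "Open WebUI release notes")
hits = [
    {"title": "Release notes", "url": "https://example.com/releases", "content": "Version list."},
    {"title": "Changelog", "url": "https://example.com/changelog", "content": "Recent changes."},
]
prompt = build_grounded_prompt("What changed recently in Open WebUI?", hits)
print(url)
print(prompt)
```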

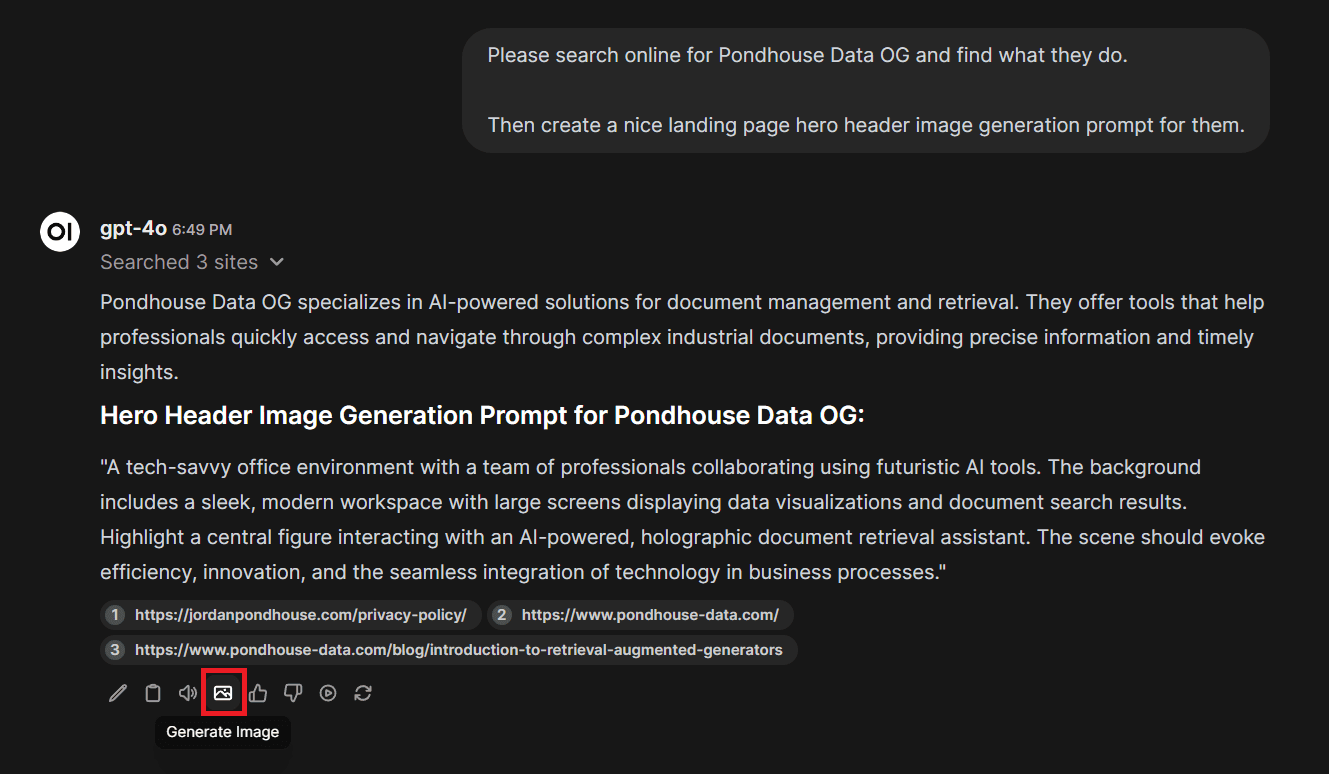

Image generation with Open WebUI

If you use a model with image generation capabilities, Open WebUI lets you use this feature natively. The workflow is a little different from ChatGPT, where you simply ask for an image and the model creates one. In Open WebUI, the image generation request is created from one of the LLM's answers: you ask the LLM for an image generation prompt, the LLM writes the prompt, and one of the action buttons under that answer lets you generate the image.

Open WebUI image generation

Note: While we like the idea of first generating a prompt and then generating an image from this prompt, we found it a little inconvenient. We think Open WebUI will change this workflow in the future to allow for direct image generation.

There are currently three image generation providers available:

- DALL-E

- AUTOMATIC1111

- ComfyUI (by far our favorite)

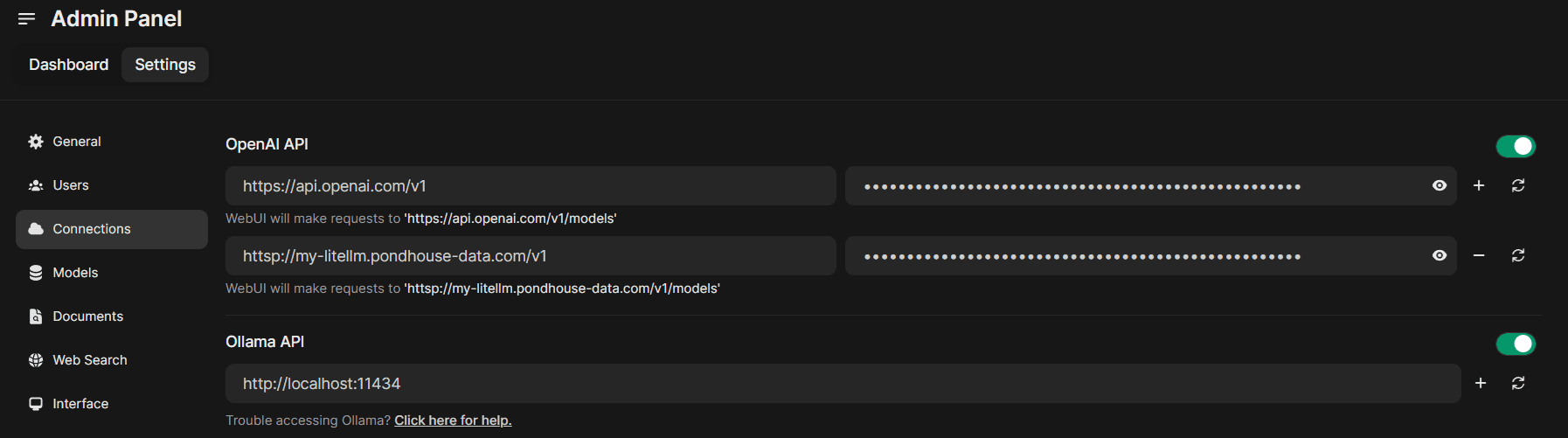

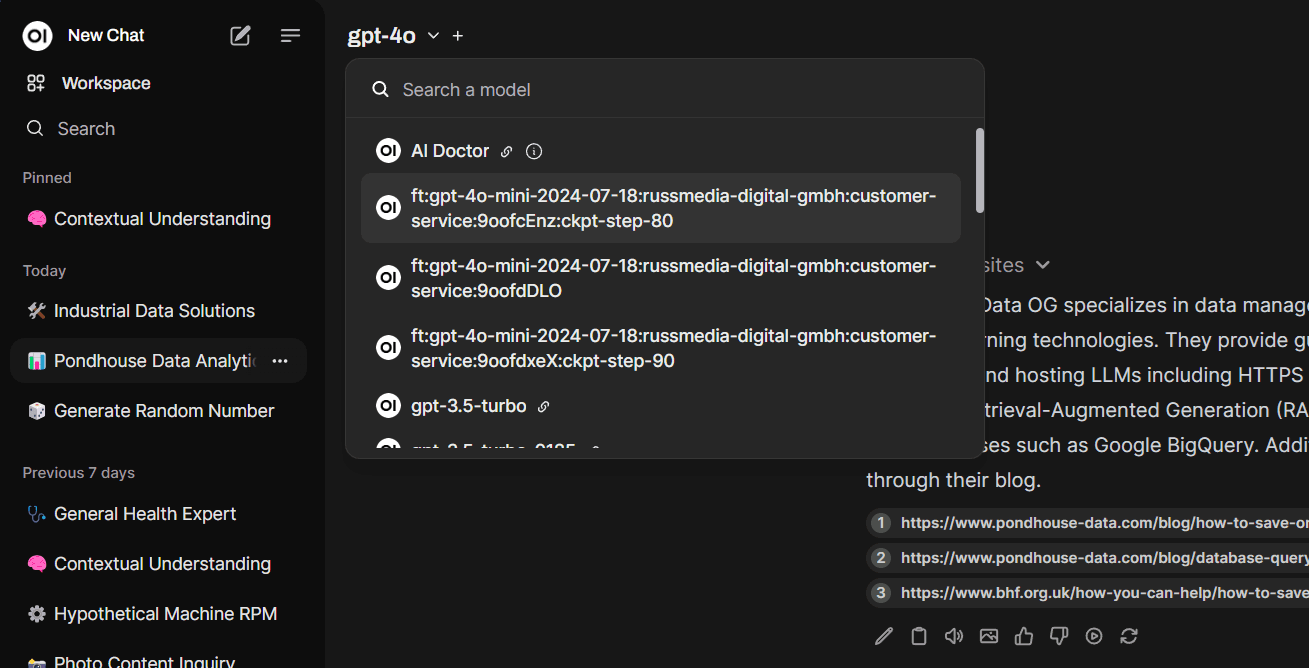

Changing the model and model management

One of the main reasons for using Open WebUI is its ability to use any LLM you want.

Open WebUI natively integrates with the OpenAI API as well as with Ollama. Because it provides first-class support for the OpenAI API, any OpenAI-compatible model provider can be used with Open WebUI.

That means we can use virtually any modern LLM:

- OpenAI can be used directly with Open WebUI

- Any of the models supported by Ollama can be used via Ollama, among them all Llama models as well as Mistral models.

- LiteLLM is an AI API proxy that converts requests in the OpenAI format into the formats of other API providers. You simply send your OpenAI-compatible request to LiteLLM, and it translates it to the API of your choice. Therefore, any API provider supported by LiteLLM can be used with Open WebUI.
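What "OpenAI-compatible" buys you is that the request stays the same while only the base URL and the model name change. The sketch below builds (without sending) such a chat completion request for three backends. The base URLs are assumptions for typical setups: Ollama's OpenAI-compatible endpoint on its default port 11434 and a LiteLLM proxy assumed to listen on port 4000. The model name `llama3.1` is just an example.

```python
import json
import urllib.request

# Assumed base URLs; adjust to wherever your backends actually run.
BACKENDS = {
    "openai": "https://api.openai.com/v1",
    "ollama": "http://localhost:11434/v1",  # Ollama's OpenAI-compatible API
    "litellm": "http://localhost:4000/v1",  # assumed LiteLLM proxy address
}


def chat_request(backend: str, model: str, prompt: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a chat completion request in the OpenAI format."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{BACKENDS[backend]}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


# Same payload shape for every backend; only the URL and model name differ.
# Ollama ignores the key, but OpenAI-style clients usually require one.
req = chat_request("ollama", "llama3.1", "Hello!", api_key="ollama")
print(req.get_method(), req.full_url)
```

Passing `req` to `urllib.request.urlopen` would actually send it, which of course requires the chosen backend to be running.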

LLM connection settings

Changing the model

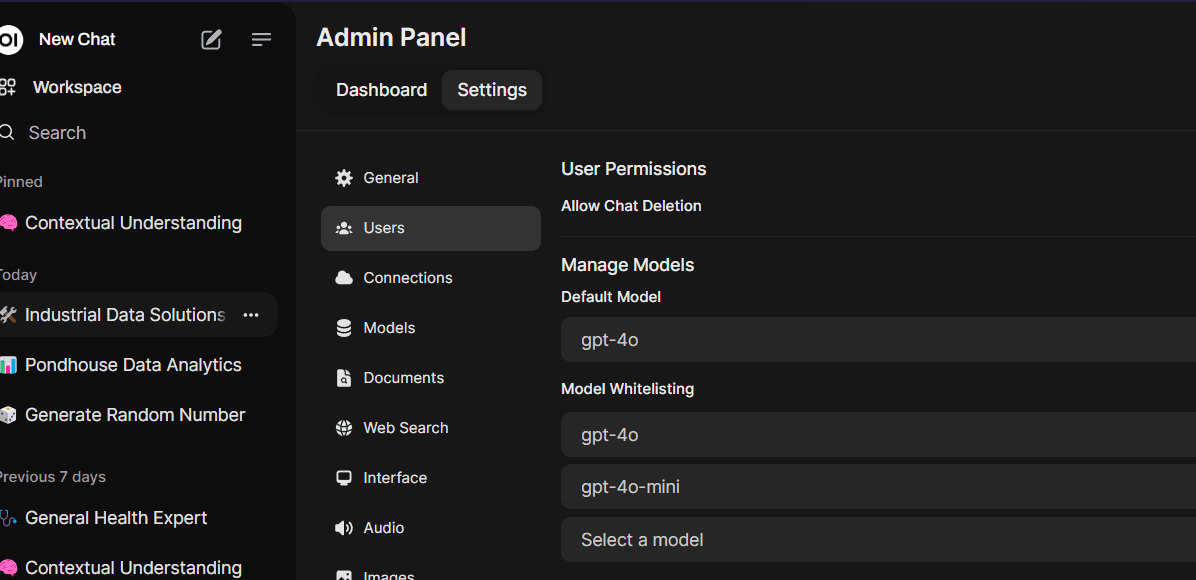

Model whitelisting

Besides changing the model, you can whitelist which models are available for your users.

Model whitelisting

Model pulling

If you want to run a model which is currently not downloaded on your server, Open WebUI will automatically download it for you.

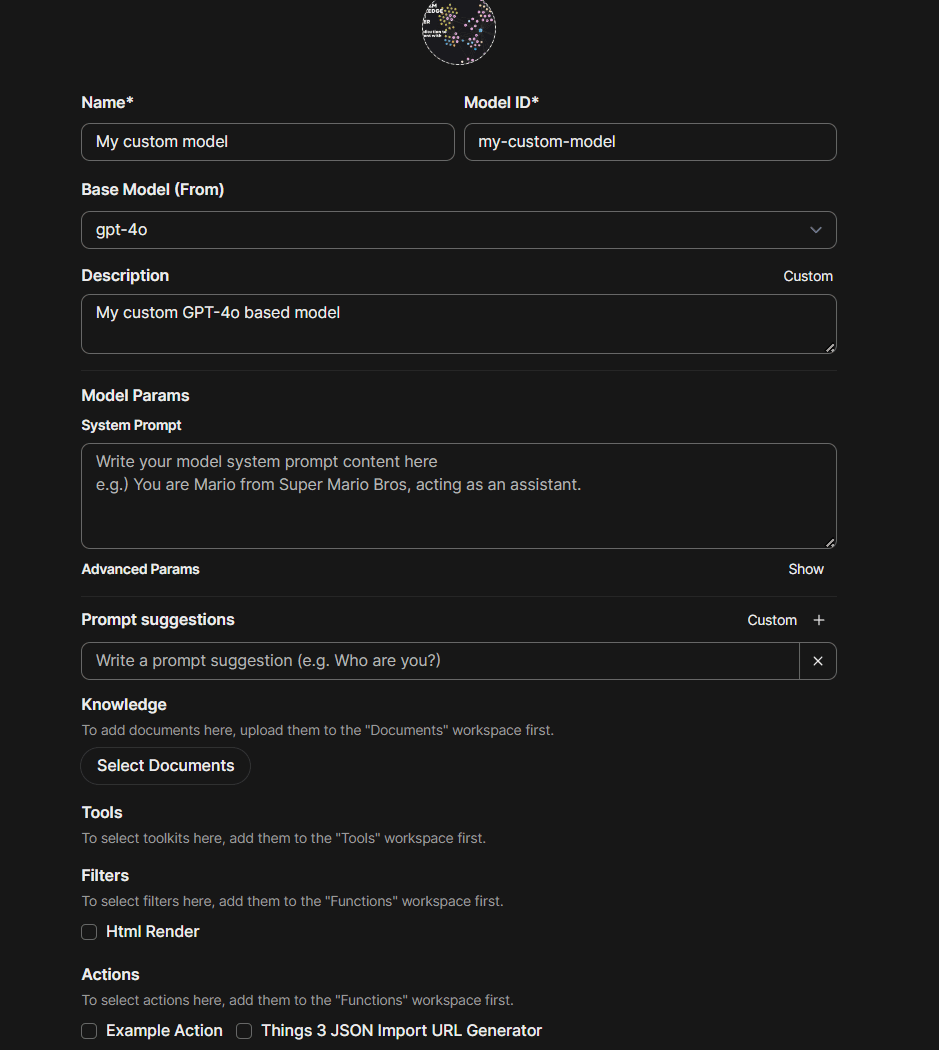

Creating custom "models"

Custom models in Open WebUI are very similar to OpenAI's custom GPTs. They are simply models with a specific system prompt, prompt suggestions, knowledge (files) and tools.

Custom models

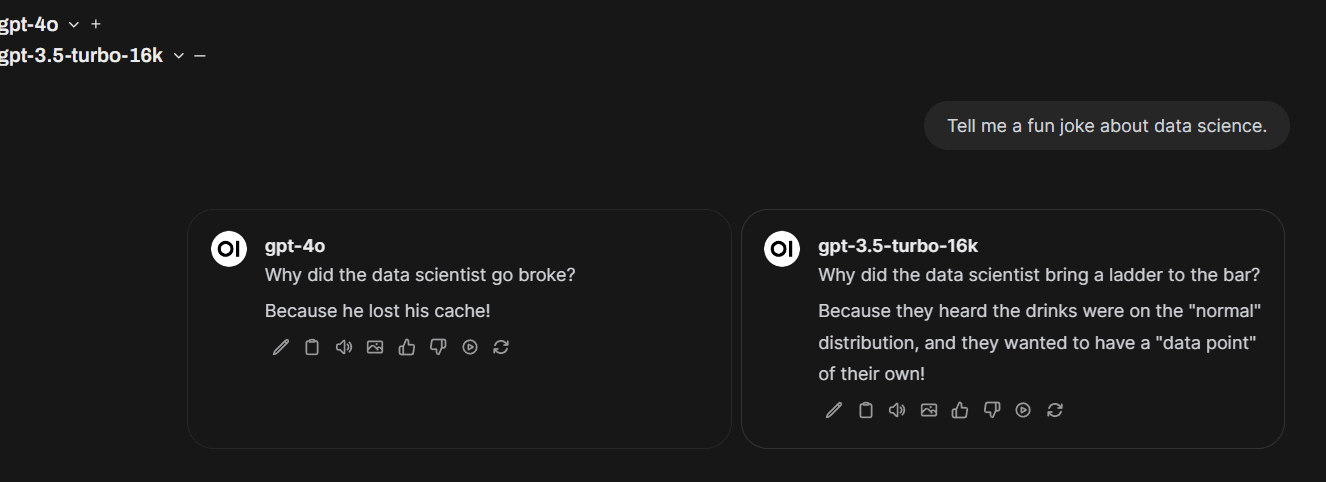

Side-by-side model comparison

As we have the possibility to run different models in our chat interface, wouldn't it be nice to compare the output of different models to find the one which best fits our use case?

Well, it would be nice, and with Open WebUI it is. Simply select two, three or more models, enter your prompt and the tool will run the same prompt in parallel for all selected models.

Note: There is a hidden use case here. Have you also experienced LLM outputs varying drastically from run to run? You create a prompt, run it, the output is good, you are happy, only to find two runs later that the output is total crap. Using the model comparison feature, you can also compare the same model with itself: just add the same model, e.g. five times, and Open WebUI will run the prompt five times in parallel.
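Under the hood, a side-by-side comparison boils down to fanning one prompt out to several models concurrently and collecting the answers in order. The sketch below shows that pattern with Python's `ThreadPoolExecutor`; `fake_model` is a hypothetical stand-in for a real LLM call, with a seeded random choice mimicking run-to-run variation.

```python
import random
from concurrent.futures import ThreadPoolExecutor


def fake_model(name: str, prompt: str, seed: int) -> str:
    """Hypothetical stand-in for an LLM call; the seed simulates run-to-run variation."""
    quality = random.Random(seed).choice(["great", "okay", "poor"])
    return f"{name}: {quality} answer to {prompt!r}"


def compare(models: list[str], prompt: str) -> list[str]:
    """Run one prompt against every listed model in parallel, preserving order."""
    with ThreadPoolExecutor(max_workers=max(1, len(models))) as pool:
        futures = [
            pool.submit(fake_model, name, prompt, seed)
            for seed, name in enumerate(models)
        ]
        return [future.result() for future in futures]


# Different models side by side ...
print(compare(["llama3.1", "mistral", "gpt-4o"], "Explain RAG in one sentence."))
# ... or the same model five times to check how stable its answers are.
for answer in compare(["llama3.1"] * 5, "Explain RAG in one sentence."):
    print(answer)
```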

Model comparison

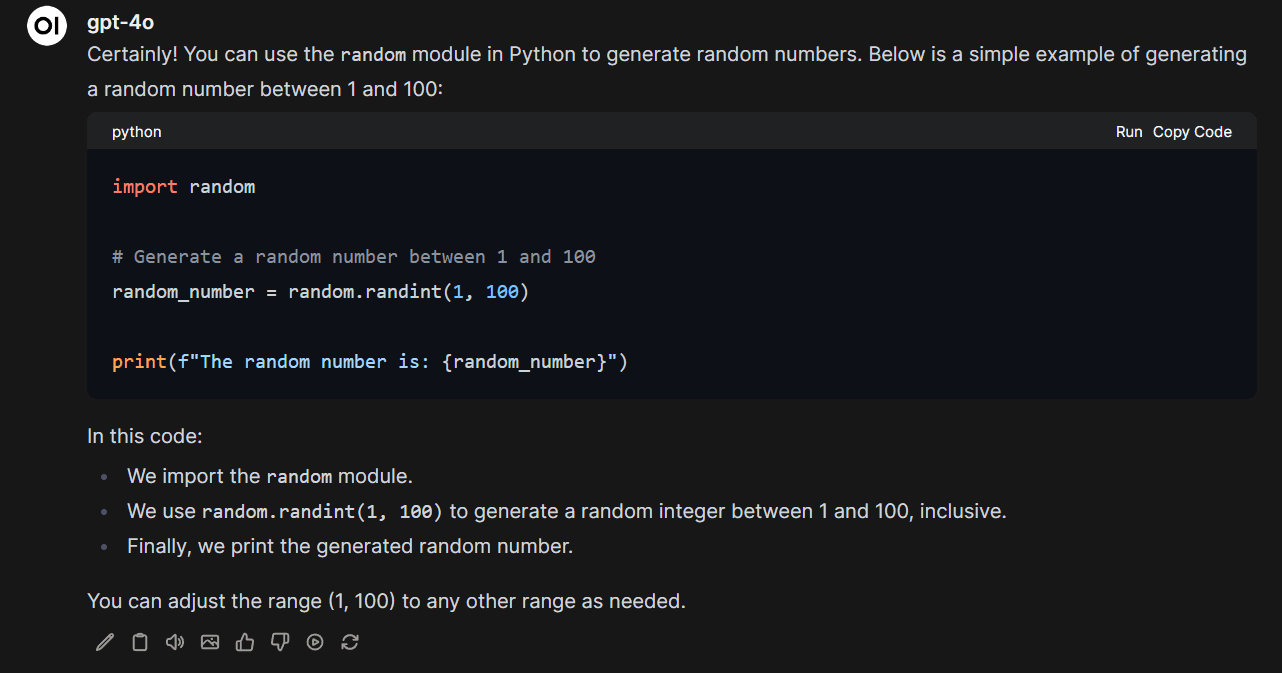

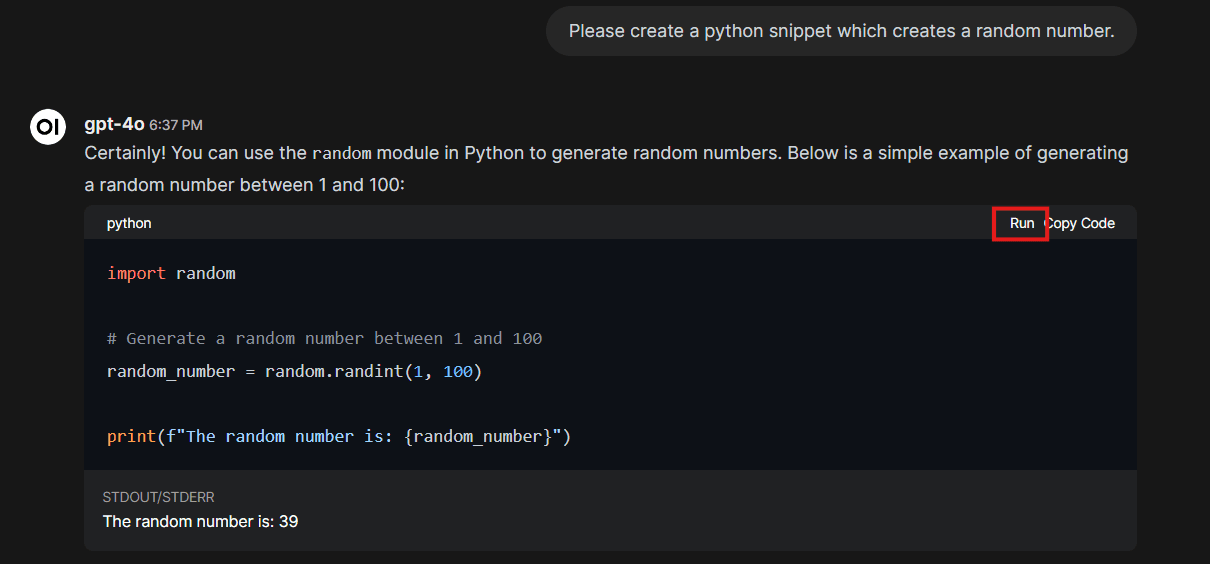

Run Python code directly in your browser

This one is a favorite of ours. I guess most of us have had our chatbot write some sort of Python snippet. With Open WebUI you can run these snippets directly in the browser, using Pyodide. No more copy-pasting Python code to your local machine, creating a virtual environment and running the code. Just click "Run".

Python code execution

Set Open WebUI as your default browser search engine

The browser's address bar acts as an interface to the search engine of our choice. With a few simple steps, one can set Open WebUI as the default search engine.

Once it is set up, you can send queries directly from the address bar: type your query into the browser's address bar and you'll be redirected to Open WebUI with the results.
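Browsers implement custom search engines with a URL template in which `%s` is replaced by your percent-encoded query. The sketch below roughly mimics that substitution. The template `http://localhost:3000/?q=%s` is an assumption: it presumes Open WebUI runs locally on port 3000 and accepts the prompt through a `q` URL parameter, so adjust the host and check the parameter against the documentation for your version.

```python
from urllib.parse import quote

# Assumed template; replace the host with your own Open WebUI address.
TEMPLATE = "http://localhost:3000/?q=%s"


def search_url(template: str, query: str) -> str:
    """Percent-encode the query and substitute it for %s, as a browser would."""
    return template.replace("%s", quote(query, safe=""))


print(search_url(TEMPLATE, "what is RAG?"))
```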

Authentication options

By default, Open WebUI offers a simple username/password authentication system.

Note: At the time of writing, there is no email validation, multi-factor authentication or password reset functionality. Therefore, we can't recommend using the default authentication.

However, Open WebUI also offers OAuth2 support to implement SSO sign-in with:

- Microsoft

- Generic OIDC provider

And for the most flexible solution, Open WebUI integrates with oauth2-proxy, a superb authentication proxy. It supports a wide range of authentication options and is quite easy to set up. The proxy is put "in front of" Open WebUI: authentication requests are redirected to the proxy, which then handles the OAuth2 flow.
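The reason the proxy has to sit in front of Open WebUI is that the application ends up trusting an identity header set by the proxy. The following sketch illustrates that trust decision in plain Python; it is not Open WebUI's code. The header name `X-Forwarded-Email` (a header oauth2-proxy can forward upstream) and the proxy address `10.0.0.2` are assumptions for illustration.

```python
from typing import Optional

# Assumed header carrying the signed-in user's email from the proxy.
TRUSTED_EMAIL_HEADER = "X-Forwarded-Email"
# Hypothetical address of the auth proxy; only it may set the header.
PROXY_ADDRESSES = {"10.0.0.2"}


def authenticated_email(remote_addr: str, headers: dict[str, str]) -> Optional[str]:
    """Return the user's email if the request came through the auth proxy."""
    if remote_addr not in PROXY_ADDRESSES:
        # A client talking to the app directly could simply forge the header.
        return None
    # HTTP header names are case-insensitive.
    lowered = {name.lower(): value for name, value in headers.items()}
    return lowered.get(TRUSTED_EMAIL_HEADER.lower())


print(authenticated_email("10.0.0.2", {"x-forwarded-email": "alice@example.com"}))
print(authenticated_email("203.0.113.5", {"X-Forwarded-Email": "mallory@example.com"}))
```

In practice this means Open WebUI itself must not be reachable except through the proxy.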

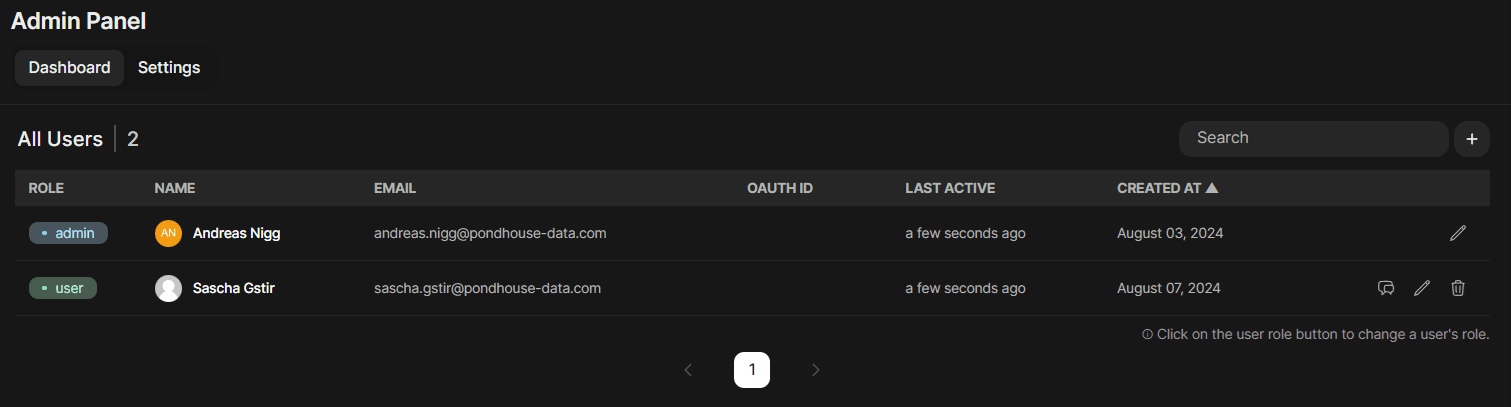

User Management and Admin Panel

As you might have guessed by now, we are rather big fans of Open WebUI. However, there is one area that is currently lacking: user management and general administration. Let's first look at what is offered and then talk about what we'd like to see in the future.

First, there is a basic user management panel that lets admins set user roles (either "admin" or "user").

User management

After signing up, users are put in a pending state until an admin approves them. Alternatively, admins can set the default role to "user" in the admin panel, bypassing the approval process.

That's it. No further user management features, no user statistics, no groups or advanced roles.

Note: The Open WebUI Roadmap also recognizes this as a shortcoming and promises to improve this area. However, at the moment user management is an area which can get cumbersome quickly.

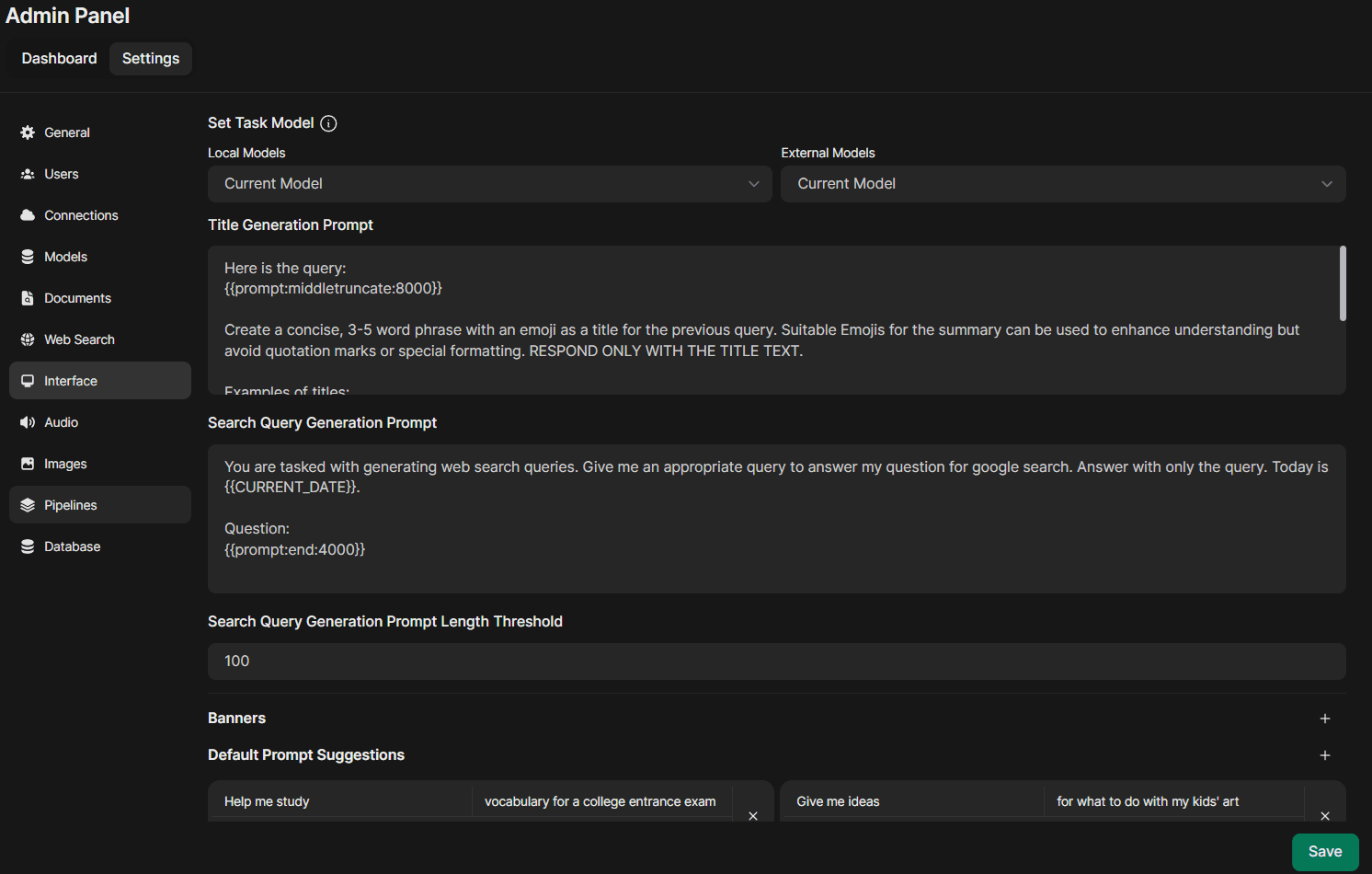

Secondly, there is a very generous "admin settings" panel that lets you configure all sorts of options for the platform. This is certainly very nice.

Admin settings

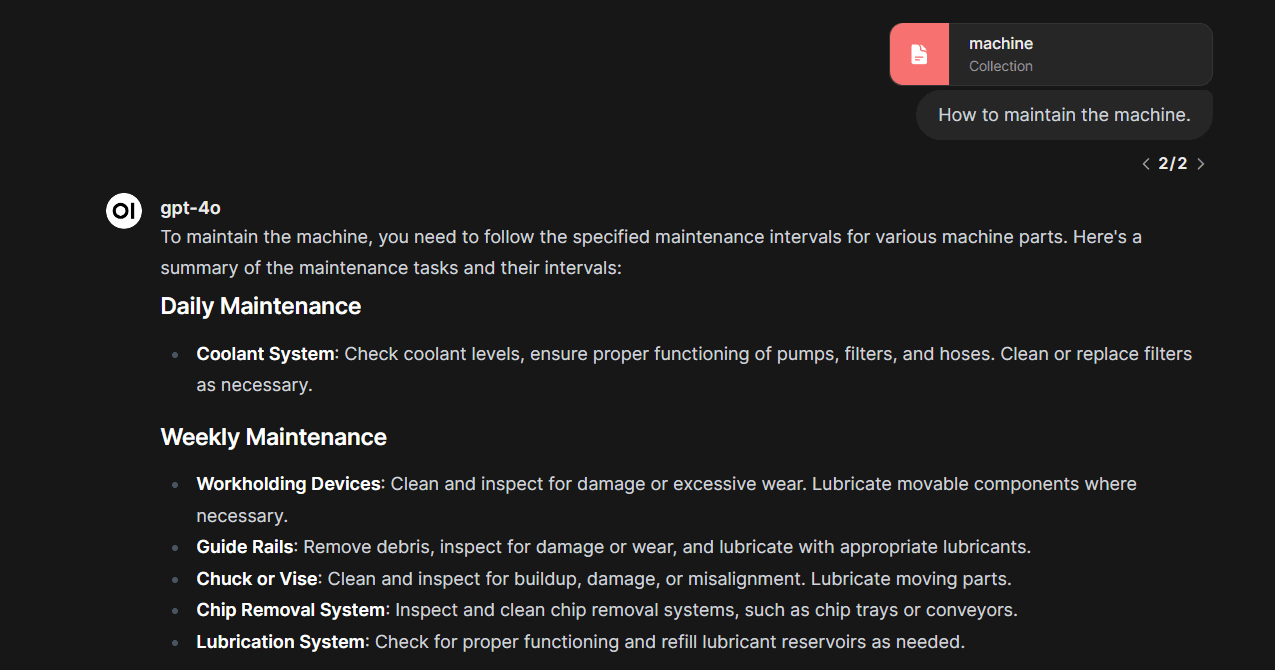

Using Open WebUI with Retrieval Augmented Generation (RAG)

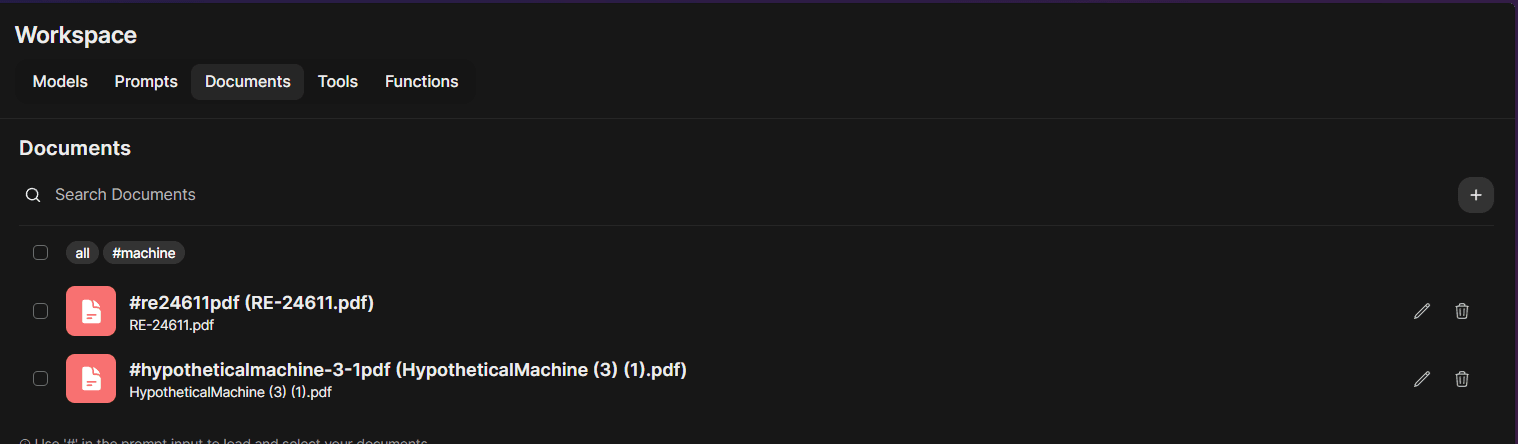

Retrieval Augmented Generation is one of the most productive areas of LLMs at the moment. In simple terms, it gives LLMs additional knowledge from documents you upload.

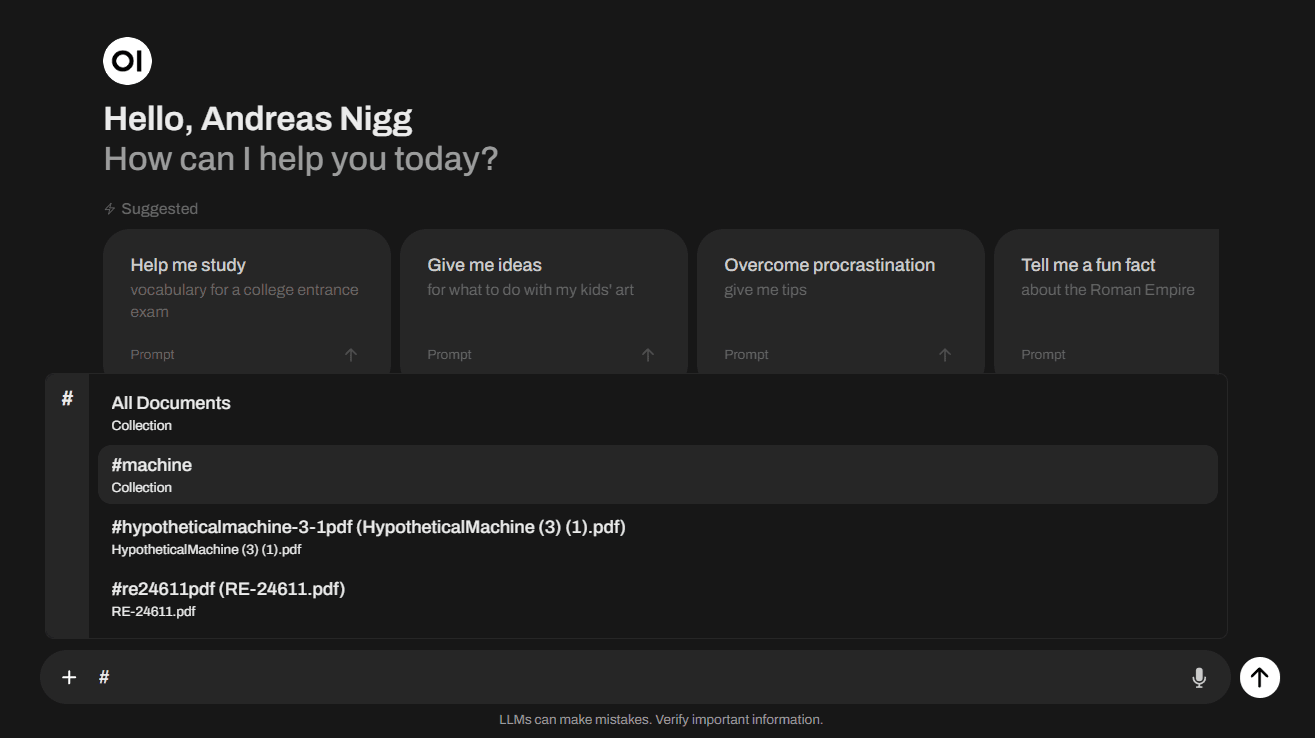

Open WebUI provides an integrated, simple retrieval augmented generation process that lets you chat with any document. It even provides an interesting tagging system for grouping documents and searching within all documents of a tag.

The huge advantage: this works out of the box, and it's astonishing how productive it is.

The drawback: it's not really scalable, and the retrieval pipeline is quite simple. So it works well for a small number of documents, I'd say up to twenty. Retrieval quality also suffers when the documents get more technical.

That being said, the RAG integration is a huge time saver and a great feature for many, many use cases to come.
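The retrieval step of such a simple RAG process can be sketched in a few lines: score each document chunk against the question, keep the best matches (optionally only those with a given tag) and paste them into the prompt. For brevity, the sketch swaps in bag-of-words cosine similarity where a real pipeline would use an embedding model and a vector store; the chunks and tags are made up.

```python
import math
import re
from collections import Counter
from typing import Optional


def bag_of_words(text: str) -> Counter:
    """Lower-cased word counts; a stand-in for a real embedding."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(count * b[word] for word, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(question: str, chunks: list[dict], tag: Optional[str] = None, k: int = 2) -> list[dict]:
    """Return the k chunks most similar to the question, optionally limited to one tag."""
    query = bag_of_words(question)
    candidates = [c for c in chunks if tag is None or tag in c["tags"]]
    candidates.sort(key=lambda c: cosine(query, bag_of_words(c["text"])), reverse=True)
    return candidates[:k]


def rag_prompt(question: str, chunks: list[dict], tag: Optional[str] = None) -> str:
    """Paste the retrieved chunks into the prompt as context."""
    context = "\n".join(f"- {c['text']}" for c in retrieve(question, chunks, tag))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


# Made-up document chunks; tags play the role of document groups.
chunks = [
    {"text": "Invoices are due within 30 days.", "tags": {"finance"}},
    {"text": "Travel expenses need manager approval.", "tags": {"finance", "hr"}},
    {"text": "The office opens at 8 am.", "tags": {"facilities"}},
]
print(rag_prompt("When are invoices due?", chunks, tag="finance"))
```

A keyword-style score like this also illustrates the drawback mentioned above: it only matches shared words, which is one reason naive retrieval struggles with technical documents.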

Upload form for documents

By typing "#" as the first character in the chat input field, you can select the document(s) to use for the prompt.

Selecting a document

Below you can find a real example, where the LLM in use found the answer to my question in the selected document collection:

Using RAG with Open WebUI

What are Open WebUI Pipelines?

So, we've covered a lot of Open WebUI's features, but we saved the best for last: pipelines.

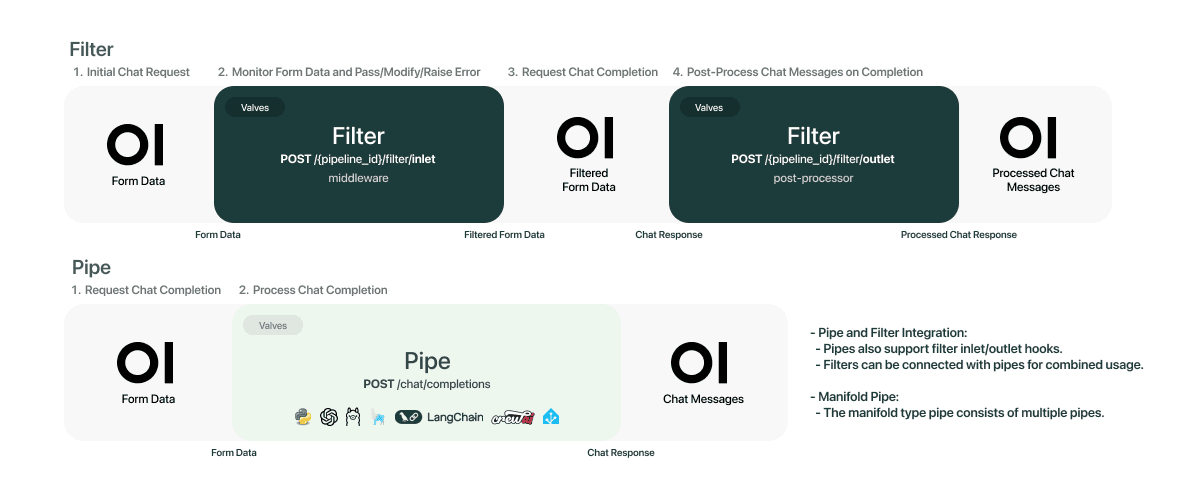

Pipelines are a way to extend Open WebUI's native capabilities. They are generic Python scripts you can connect between your Open WebUI instance and the LLM in use. Each time a user sends a chat message, it is passed to the pipeline and then to the LLM. Even better, the LLM's answer is passed through the pipeline as well.

Pipeline overview

There are currently four main types of pipeline objects:

- Filters: Filters are used to perform actions on incoming user messages and outgoing assistant (LLM) messages. Potential actions in a filter include sending messages to monitoring platforms (such as Langfuse or Datadog), modifying message contents, blocking toxic messages, translating messages into another language, or rate limiting messages from certain users.

- Pipes: Pipes are functions that can be used to perform actions before LLM messages are returned to the user. Examples of potential actions you can take with pipes are Retrieval Augmented Generation (RAG), sending requests to non-OpenAI LLM providers (such as Anthropic, Azure OpenAI, or Google), or executing functions right in your web UI. Pipes can be hosted as a Function or on a separate Pipelines server. (Just imagine the possibilities here! Pipes basically receive the user's requests, you can do whatever you are capable of doing with Python, and send the answer back to the user.)

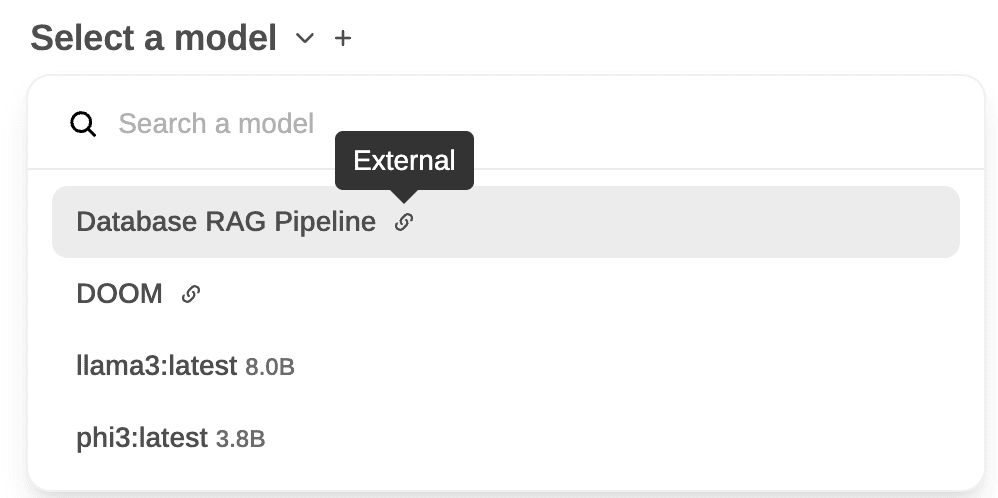

Pipes simply act as "custom models", and users can select them in the model selection dropdown. To use a pipe, you simply select it, and Open WebUI routes all traffic through the pipe.

Pipe selection (taken from the Open WebUI docs)

Note: There is some confusion in the Open WebUI documentation about functions, pipes and pipelines. Pipelines are the overall concept of connecting custom code to your LLM. Functions are your custom code that sits between the user and the LLM; they are executed on the Open WebUI server. Pipes are basically the same as functions, but they can run on a separate pipelines server and are therefore more powerful (as they can have their own server infrastructure).
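To make the idea concrete, here is a minimal pipe sketch. The `Pipe` class with a `Valves` settings object and a `pipe(self, body)` method mirrors the general shape of Open WebUI's function templates as we understand them, but simplified: the real templates use a pydantic `BaseModel` for the valves (a dataclass stands in here), and the method can receive extra arguments such as the current user. The FAQ content is hypothetical; the point is that the pipe answers in plain Python without calling any LLM.

```python
from dataclasses import dataclass, field


class Pipe:
    """Sketch of a pipe that shows up as a 'model' in the model dropdown."""

    @dataclass
    class Valves:
        # Admin-editable settings (a dataclass standing in for pydantic).
        faq: dict = field(default_factory=lambda: {
            "vpn": "Connect via the company VPN client before opening the intranet.",
        })

    def __init__(self):
        self.valves = self.Valves()

    def pipe(self, body: dict) -> str:
        # body follows the OpenAI chat format: {"model": ..., "messages": [...]}
        question = body["messages"][-1]["content"].lower()
        for keyword, answer in self.valves.faq.items():
            if keyword in question:
                return answer
        return "Sorry, I only know about: " + ", ".join(self.valves.faq)


p = Pipe()
print(p.pipe({"messages": [{"role": "user", "content": "How do I use the VPN?"}]}))
```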

- Tools: Tools are known from many other LLM platforms. They are simple functions that the LLM can decide to call or not. That's the difference from functions and pipes: functions and pipes are always executed, whereas tools are sent to the LLM as a sort of option. If the LLM needs a tool for a specific task, the tool is executed.

-

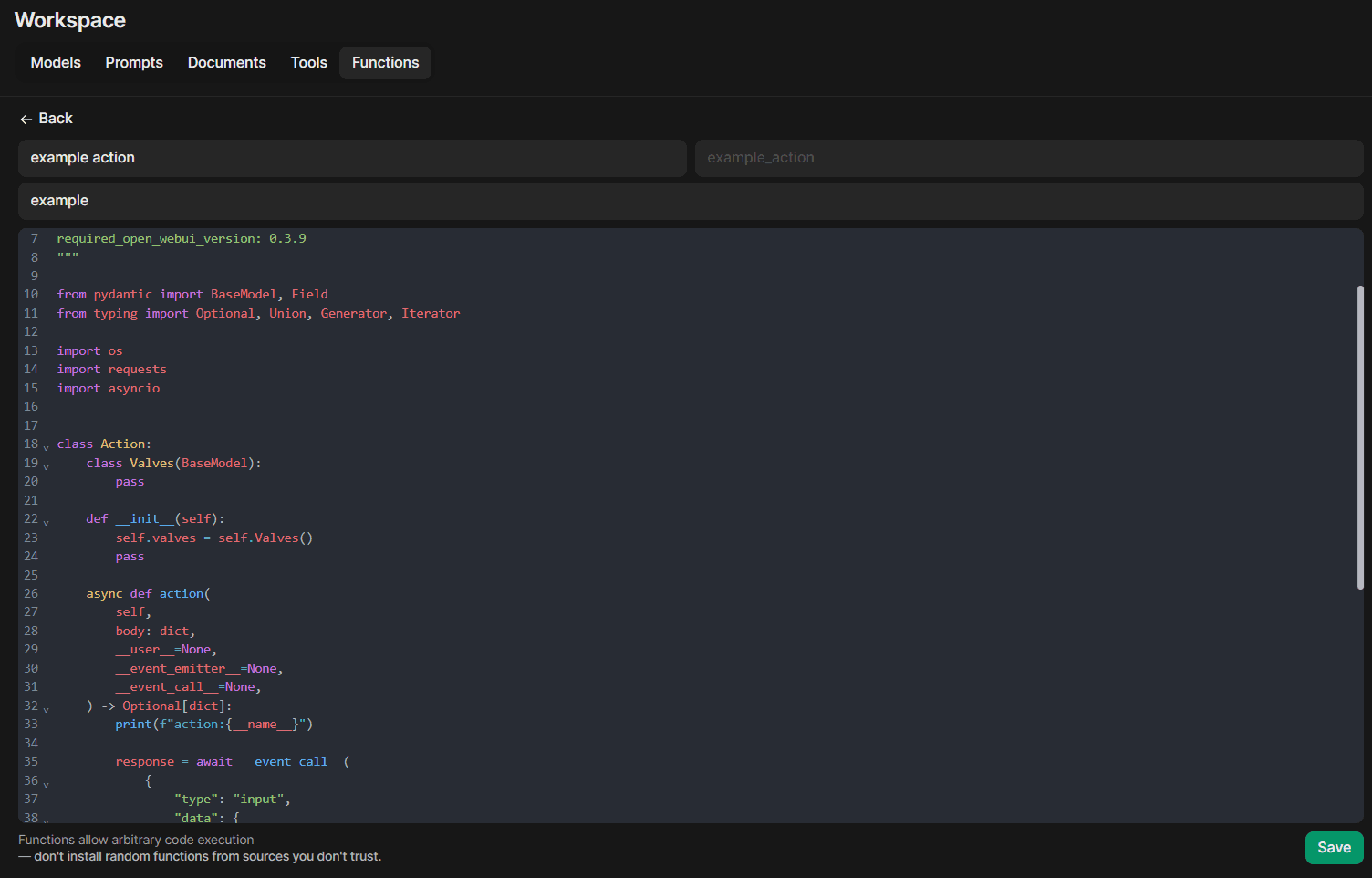

Actions: Action functions allow you to write custom buttons to the message toolbar for end users to interact with. This sounds quite mindb-lowing to us. Therefore, I'll repeat again: Actions allow you create a custom button which is then placed at each answer of the LLM. Why'd you want to do this? One absolute gorgeous example is shown in the gif below (taken from the Open WebUI documentation). They place a button "Create visualization" at the answer and when clicked, they render a full-fletched charting environment. Absolutely stunning.

Action example
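The difference between tools and actions can be sketched as follows. A tool is only described to the LLM and runs when the model's reply asks for it; an action runs when the user clicks its button. The class and method names follow the general shape of Open WebUI's templates as we understand them, but the spec format, the simulated tool call and the `__event_emitter__` status payload are simplified assumptions rather than the exact API.

```python
import asyncio
import inspect
import json


class Tools:
    """Tools are only offered to the LLM; the model decides whether to call them."""

    def get_word_count(self, text: str) -> str:
        """Count the words in a piece of text."""
        return str(len(text.split()))


def tool_specs(tools: object) -> list[dict]:
    """Describe each public method so it can be sent to the LLM as an option."""
    return [
        {
            "name": name,
            "description": inspect.getdoc(method),
            "parameters": list(inspect.signature(method).parameters),
        }
        for name, method in inspect.getmembers(tools, inspect.ismethod)
        if not name.startswith("_")
    ]


def run_tool_call(tools: object, call: dict) -> str:
    """Execute a tool only because the LLM's reply asked for it."""
    method = getattr(tools, call["name"])
    return method(**json.loads(call["arguments"]))


class Action:
    """An action backs a button shown under each assistant message."""

    async def action(self, body: dict, __user__=None, __event_emitter__=None) -> dict:
        answer = body["messages"][-1]["content"]
        if __event_emitter__ is not None:
            # Assumed status event shape, shown to the user while the action runs.
            await __event_emitter__(
                {"type": "status", "data": {"description": "Analyzing answer", "done": True}}
            )
        return {"content": f"The answer has {len(answer.split())} words."}


# Simulated LLM reply that decided to call the tool.
call = {"name": "get_word_count", "arguments": json.dumps({"text": "tools are optional"})}
print(tool_specs(Tools()))
print(run_tool_call(Tools(), call))

# Simulated click on the action button below an assistant message.
events = []


async def collect(event: dict) -> None:
    events.append(event)


body = {"messages": [{"role": "assistant", "content": "Pipelines extend Open WebUI."}]}
print(asyncio.run(Action().action(body, __event_emitter__=collect)))
```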

How do you create a pipeline object? Open WebUI conveniently provides a code editor as part of its offering. Simply open the admin panel, add your Python code and you are good to go. Note that this is true for all of the objects above except pipes that run on their own pipelines server. We'll provide a more detailed guide on creating pipelines in the future. For the other objects, however, the process is quite simple:

Creating a pipeline object
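As an example of what you could paste into that editor, here is a minimal filter sketch that blocks messages containing certain words and rate-limits each user. The `inlet`/`outlet` method pair reflects how Open WebUI filters hook in before and after the LLM. The limits, the blocked word, the use of an exception to reject a message and the dataclass standing in for the usual pydantic valves are our own assumptions.

```python
import time
from collections import defaultdict, deque
from dataclasses import dataclass
from typing import Optional


class Filter:
    """Sketch of a filter: inlet() runs before the LLM, outlet() after it."""

    @dataclass
    class Valves:
        # Admin-editable settings (a dataclass standing in for pydantic).
        max_messages: int = 5          # messages allowed per user ...
        window_seconds: float = 60.0   # ... within this rolling window
        blocked_words: tuple = ("password",)

    def __init__(self):
        self.valves = self.Valves()
        self.recent = defaultdict(deque)  # user id -> timestamps of recent messages
        self.reply_lengths = []           # simple monitoring data from outlet()

    def inlet(self, body: dict, __user__: Optional[dict] = None) -> dict:
        """Check the incoming user message; raising an exception rejects it."""
        text = body["messages"][-1]["content"].lower()
        if any(word in text for word in self.valves.blocked_words):
            raise Exception("Message blocked by content filter.")
        user_id = (__user__ or {}).get("id", "anonymous")
        now = time.monotonic()
        stamps = self.recent[user_id]
        # Drop timestamps that have fallen out of the rolling window.
        while stamps and now - stamps[0] > self.valves.window_seconds:
            stamps.popleft()
        if len(stamps) >= self.valves.max_messages:
            raise Exception("Rate limit reached, please try again later.")
        stamps.append(now)
        return body

    def outlet(self, body: dict, __user__: Optional[dict] = None) -> dict:
        """Record the length of the assistant's reply, then pass it on unchanged."""
        self.reply_lengths.append(len(body["messages"][-1]["content"]))
        return body
```

Keeping the state in memory is the simplest choice for a sketch; it resets whenever the server restarts.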

And last but certainly not least: there is an official Open WebUI community hub showcasing several great pipelines created by the community. You can simply take one of them or use them as a starting point for your own extensions.

Conclusion

In conclusion, Open WebUI presents itself as a noteworthy alternative to ChatGPT, offering a combination of features that cater to users seeking more control over their AI interactions. Its open-source nature and self-hosted approach address key issues around data privacy and customization that many organizations and individuals prioritize.

The platform's support for multiple models, integration of RAG capabilities, and innovative features like browser-based Python execution are superb and offer many hours of joy. The pipeline system, in particular, offers significant flexibility for extending the platform's functionality.

However, it's important to note that Open WebUI is not without its limitations. Areas such as user management could benefit from further development, and some workflows may require refinement. Still, looking at the roadmap as well as the GitHub commit frequency, we are quite confident that these areas will be improved in weeks, not months.

For those prioritizing data control, avoiding vendor lock-in, or requiring specific customizations, Open WebUI offers a viable solution. Its ability to work with various models and APIs provides users with options to set up the system to their requirements. And the incredible pipeline system makes Open WebUI the most flexible LLM platform as of now.

